Nearly every computer system has its own logging function. The log keeps careful, detailed records of the events that take place within a particular application. When an event occurs, the event logger automatically produces a record of it. In addition to recording the occurrence, it also notes the pertinent conditions at the time the event took place. All this data goes into the official record, and that record is called a log file.
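As a rough illustration of an event record that also captures the conditions at the time, here is a minimal sketch using Python's standard `logging` module. The application name `orders`, the message, and the fields `user_id`, `attempt`, and `latency_ms` are made up for the example.

```python
import logging
import sys


def make_logger(stream) -> logging.Logger:
    """Return a logger that writes timestamped records to the given stream."""
    logger = logging.getLogger("orders")  # hypothetical application name
    logger.setLevel(logging.INFO)
    logger.handlers.clear()   # avoid duplicate handlers if called twice
    logger.propagate = False  # keep records out of the root logger
    handler = logging.StreamHandler(stream)
    # Each record carries the time, severity, application name, and message.
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")
    )
    logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = make_logger(sys.stderr)
    # The event ("payment retry") plus the conditions under which it occurred.
    log.warning("payment retry user_id=%s attempt=%d latency_ms=%d", "u-123", 2, 840)
```

In practice the handler would usually write to a file or ship records to a central service rather than to standard error.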

Who uses these important caches of data? Typically, programmers use the information to debug software and find out why errors occurred in the first place. But in addition to programmers and other high-level technicians, these files come in handy for anyone who wants to check on system performance, see current security status, and gain insights into overall application behavior. An entire area of study has come into being around collecting, analyzing, and monitoring logged data. It’s called log management, and it consists of three distinct subcategories. Here’s a summary of the three topics that competent log management software addresses.

The Aggregator

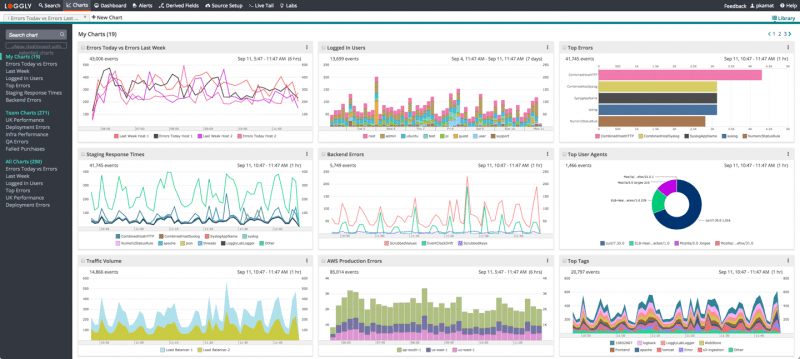

IT environments are growing more complex by the day, and highly sophisticated applications are needed to manage the huge amounts of information created by computer processes. Managers need a centralized way to monitor the performance and security of all applications. It is possible to review each app’s log file individually in order to keep track of its status, but a much more efficient approach is to collect all the data into a single location on one platform. An aggregator performs this task. Loggly’s aggregation software is capable of bringing all the information together, no matter where it originated in the IT infrastructure. Once the data is on a single platform, it’s much easier for humans to look at it, mull it over, do in-depth analysis on it, and review it for as long as they need.
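To make the idea of aggregation concrete, here is a minimal Python sketch that merges several per-application logs into one time-ordered list. It is not Loggly's API. It assumes every line starts with an ISO 8601 timestamp followed by a space, and that each log is already in time order. The names `LogEntry`, `parse_lines`, and `aggregate` are made up for the example.

```python
import heapq
from datetime import datetime
from typing import Iterable, Iterator, NamedTuple


class LogEntry(NamedTuple):
    timestamp: datetime
    source: str
    message: str


def parse_lines(source: str, lines: Iterable[str]) -> Iterator[LogEntry]:
    """Parse lines of the assumed form '<ISO-8601 timestamp> <message>'."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        stamp, _, message = line.partition(" ")
        yield LogEntry(datetime.fromisoformat(stamp), source, message)


def aggregate(sources: dict[str, Iterable[str]]) -> list[LogEntry]:
    """Merge per-application logs into one list ordered by timestamp."""
    streams = [parse_lines(name, lines) for name, lines in sources.items()]
    # heapq.merge relies on each individual stream already being in time order.
    return list(heapq.merge(*streams, key=lambda entry: entry.timestamp))
```

Called with a dictionary such as `{"web": web_lines, "db": db_lines}`, `aggregate` returns one list in which entries from both applications are interleaved by time, with each entry still labeled by its source.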

Analysis

Within medium and large organizations, different software programs and human managers perform the separate functions of analysis, aggregation, and monitoring. When it comes to analyzing detailed data, human technicians tend to look for the same types of patterns, like slow query times, overloaded memory, high CPU usage, and looped processes. However, analysis can take many forms and has a wide range of uses. A competent software program or well-trained technician can discover system-wide flaws by doing routine scans of the logs. An in-depth examination can lead to permanent solutions to the problems that caused slowdowns, system-wide outages, and other critical issues.
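A routine scan for patterns like these could look something like the following Python sketch. The field names `query_ms`, `cpu_pct`, and `mem_pct` and the thresholds are assumptions made for the example; real log formats and sensible limits vary from system to system.

```python
import re
from collections import Counter
from typing import Iterable

# Hypothetical rules: name -> (pattern capturing a number, threshold).
RULES = {
    "slow_query": (re.compile(r"query_ms=(\d+)"), 1000),
    "high_cpu": (re.compile(r"cpu_pct=(\d+)"), 90),
    "high_memory": (re.compile(r"mem_pct=(\d+)"), 90),
}


def scan(lines: Iterable[str]) -> Counter:
    """Count how many log lines meet or exceed each rule's threshold."""
    findings: Counter = Counter()
    for line in lines:
        for name, (pattern, threshold) in RULES.items():
            match = pattern.search(line)
            if match and int(match.group(1)) >= threshold:
                findings[name] += 1
    return findings
```

Keeping the rules in a table makes it easy to add another pattern without changing the scanning loop.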

Monitoring

Within a vast IT environment, monitoring can act as a preventive measure. Programs that continuously observe logs can spot small problems before they become big ones. For example, a server whose memory usage is monitored is far less likely to crash, provided that issues are addressed as they arise. Techs typically set alert levels on factors like critical memory levels, CPU usage, unauthorized attempts to download sensitive files, and other common areas of concern.
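Alert levels of this kind can be sketched as simple threshold rules. The Python example below is a minimal illustration and not any particular product's alerting API. The metric names, the warning and critical levels, and the names `AlertRule` and `check` are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AlertRule:
    metric: str      # hypothetical metric name, e.g. "mem_pct"
    warning: float   # level at which a warning is raised
    critical: float  # level at which a critical alert is raised

    def evaluate(self, value: float) -> Optional[str]:
        """Return 'critical', 'warning', or None for a single reading."""
        if value >= self.critical:
            return "critical"
        if value >= self.warning:
            return "warning"
        return None


def check(rules: list[AlertRule], reading: dict[str, float]) -> list[str]:
    """Compare one set of readings against every rule and list the alerts."""
    alerts = []
    for rule in rules:
        if rule.metric not in reading:
            continue  # no data for this metric in the current reading
        level = rule.evaluate(reading[rule.metric])
        if level:
            alerts.append(f"{level}: {rule.metric}={reading[rule.metric]}")
    return alerts
```

A monitoring loop would call `check` on each new reading and route any returned alerts to whoever is on call, so that a warning can be handled before it turns critical.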